Andy Bell 1, Jennifer Kelly 2, Peter Lewis 3

Purpose:

Over the past two decades, the discipline of paramedicine has seen exponential growth as it moved from a work-based training model to an autonomous professional training model grounded in academia. With limited evidence-based literature examining assessment in paramedicine, this paper aims to describe student and academic views on the preference for the Objective Structured Clinical Examination (OSCE) as an assessment modality, the sufficiency of pre-OSCE instruction, and whether OSCE performance is a perceived indicator of clinical performance.

Design/Methods:

A voluntary, anonymous survey was conducted to examine the perception of the reliability and validity of the OSCE as an assessment tool by students sitting the examination and academics facilitating the assessment.

Findings:

This study’s results revealed that the more confident the students are in the assessment's reliability and validity, the more likely they perceive the assessment as an effective measure of their clinical performance. The perception of reliability and validity differs when acted upon by additional variables. The anxiety associated with the assessment and adequacy of performance feedback are cited as major influencers.

Research Implications:

This study’s findings indicate the need for further paramedicine discipline-specific research on assessment methodologies to determine best practice models for high-quality assessments.

Practical Implications:

The development of evidence-based best practice guidelines for assessing student paramedics should be of the utmost importance to a young, developing profession, such as paramedicine.

Originality/Value:

There is very little research in the discipline-specific area of paramedicine assessment, and discipline-specific education research is essential for professional growth.

Limitations:

The principal researcher was a faculty member of one of the institutions surveyed. However, all data was non-identifiable at the time of data collection.

Keywords: paramedic, paramedicine, Objective Structured Clinical Examinations, OSCE, education, assessment

1 University of Southern Queensland, Ipswich, Australia

2 School of Health and Biomedical Sciences, RMIT University, Melbourne, Australia

3 University of Queensland, School of Nursing, Midwifery and Social Work, Brisbane, Australia

Corresponding author:

Andy Bell, I Block, University of Southern Queensland, Ipswich Campus, 11 Salisbury Road, Ipswich QLD 4305, [email protected]

The Objective Structured Clinical Examination (OSCE) has been implemented and widely studied in health-related fields, such as nursing and medicine, for decades. However, this method of assessment has not been convincingly studied in the context of paramedicine. The discipline of paramedicine has developed rapidly over the past 20 years, and the pace of change is presenting various professional, procedural and andragogic challenges (O’Brien et al. 2014; Williams et al. 2009). For instance, there is a growing expectation that paramedics will provide a high level of care for emergency and non-emergency patients in high-pressure, time-critical environments immediately following graduation (O’Meara et al. 2017). Expectations of a paramedic’s level of clinical competence following program completion have likewise grown significantly. Further, the accurate assessment and measurement of paramedic students’ clinical competence have become a challenging responsibility for those involved in their education (Tavares et al. 2013). This change in the educational environment has led to an increased emphasis on the quality of training and assessments that surround the clinical competence of paramedic students (O’Brien et al. 2014; Sheen 2003).

A body of knowledge, based on research specific to paramedicine education, must emerge to improve paramedic assessment and curricula and to support the growth of paramedicine as a discipline (Krishnan 2009; Williams et al. 2009). This paper addresses findings from a study conducted to examine student and academic perceptions of the reliability and validity of the OSCE format as an assessment tool for paramedic students. This paper aims to describe student and academic views on the preference for OSCE as an assessment modality, the sufficiency of pre-OSCE instruction, and whether OSCE performance is a perceived indicator of clinical performance. This paper also aims to determine if students believe OSCE-associated anxiety impedes their OSCE performance. The paper will address the following research questions to meet these aims:

How do paramedic students rank the OSCE assessment compared to other assessment modalities used in a Bachelor’s of Paramedicine programme?

Do paramedic students believe OSCE-associated anxiety is a barrier to their performance in an OSCE assessment?

Is there a belief among students and academics that a student’s OSCE performance will reflect their clinical practice performance?

Do students and academics feel that pre-OSCE instruction is sufficient to assist students in their OSCE assessment?

Historically, the level of student clinical competence in health-related areas, such as nursing and medicine, has been measured by an OSCE (Harden 2015). Implementing this assessment format in health-related disciplines emerged due to the perception that OSCEs are consistent, reliable and valid (Rushforth 2007). Many education programs also utilise OSCEs due to their format and objective nature (Nasir et al. 2014). Furthermore, it is widely recognised that OSCEs provide a specific set of data on a student’s clinical competency that aligns with the effectiveness of clinically-based education programs (Bartfay et al. 2004). It is for these reasons that OSCEs continue to be utilised by many preparatory institutions as an important determining factor in the assessment and ratification of a student’s competency to practice (Harden 2015). Therefore, assessing skill development via an OSCE is considered effective in identifying skill deficits and can provide a reliable evaluation of the students’ clinical performance.

Traditionally, an OSCE format comprised a series of practical-based stations designed to test the clinicians’ procedural and data interpretation skills in an applied clinical context (Harden 1988). Early studies on OSCEs identified the need to constantly amend or consider the OSCE format to ensure the quality and success of this assessment (Bartfay et al. 2004). Today, the structure of OSCE assessments and formats ranges from a single station, through four or five stations, to 20 stations or more (McKinley et al. 2008). These stations could involve a variety of different assessment items (e.g., written questions, oral questions, clinical patient presentations and scenarios) or a combination of various formats (Harden 2015). The practical aspect of OSCE assessments can be emphasised using various techniques, such as simulated patients, videotaped interviews or written and verbal reports of patient histories (Harden 2015). There is an extensive history of simulation-based education and assessment. Still, it is an area of ongoing research and development for associated health-related disciplines, and the process of improving its effectiveness as a tool continues to be investigated (Jansson et al. 2013).

When developing an assessment item, such as an OSCE, academic staff must consider the intended learning outcomes for that particular assessment and ensure that they are disseminated to the student. However, the issue of reliability and validity can be problematic concerning the format of an OSCE. Indeed, it has been argued that an appropriate level of attention to the assessment design is required for the level of reliability and validity to be acceptable (Carraccio & Englander 2000). A recurring theme in the literature from relevant health fields on the reliability and validity of the OSCE format indicates that reliability is reflective of the number of stations or posts included in the examination (Mitchell et al. 2009). Further, Pell et al. (2012) concluded that an OSCE assessment with a high number of assessable components was typically more reliable than one that only measured a limited number of clinical competencies. Hastie et al. (2014) also found that a larger number of stations, utilising an array of clinical scenarios, applying skills, incorporating standardised checklists, and providing variation in the examiner(s) marking allowed the student to display a more accurate presentation of their clinical competence.

The notion of reliability depends significantly on the ability to reproduce the assessment consistently with minimal changes or modifications. Mitchell et al. (2009) claim that four areas of development must be considered to develop reliability and validity within the OSCE assessment. These areas include (1) measuring skills integration; (2) measuring the student’s professional behaviour; (3) the ability to differentiate between performance and competence; (4) ensuring that measuring clinical competence is done in a context-reliant environment. Adamson et al. (2013) develop these concepts further by placing them in a context of different assessment styles, such as short-duration stations and computer-based simulations.

The original design of OSCEs for paramedicine was based on multiple stations (Harden 1988). However, in more recent years, the stations have been modified to include a range of scenarios where the clinician is required to diagnose and manage a patient using ‘hard’ technical, clinical skills and ‘soft’ skills, such as effective communication (Von Wyl et al. 2009). O’Brien et al. (2013) found the use of simulated training models in the development of an OSCE assessment had led to an improvement in learning and the assessor’s ability to evaluate the participant’s clinical competence. Moreover, the purpose of the assessment is to allow the participant to demonstrate their knowledge of the patient’s condition and evidence of their clinical skills.

Current evidence suggests that a systematic approach to student assessment feedback should be maintained to improve the quality of overall student experience (Grebennikov & Shah 2013). There are many similarities between the various health-related professions. Still, it is important to remember that each discipline has its own specific roles and responsibilities to consider. One area that seems to have been largely overlooked by current researchers in paramedicine is the perception of students regarding the effectiveness, reliability and validity of OSCE assessments (Harden 2015). Therefore, it is essential to gather the appropriate information regarding the perception of educational assessment methodologies, such as the OSCE, as it pertains to paramedicine students. A study was conducted that specifically included the perceptions of paramedicine students and of the academics developing and facilitating the assessments in a paramedicine education program.

Paramedic students and paramedic academics across two Australian universities, each offering a Bachelor’s of Paramedicine, were approached for this study. All paramedic students were in their final year of a Bachelor’s of Paramedicine degree. All paramedic academics taught in a Bachelor’s of Paramedicine programme. A voluntary, opt-in, anonymous online survey was administered to paramedic students and academics. An online survey method is appropriate since surveys are well-suited to studies aiming to investigate the descriptive aspect of a situation. Surveys enable the exploration of different aspects of a given situation or for hypothesis testing (Farmer et al. 2016). Each of these areas has been carefully considered during the design and development of this project (Kelley et al. 2003). Demographics played no significant role in the results between the responses of students enrolled in the single degree program and the students undertaking the dual degree program. Also, there was an equal mix of age and gender.

The survey was generated using the Qualtrics software program and was designed using a set of five-point bipolar Likert scale questions. This format enabled the development of a standardised form that could then be used to collect data from a generalisable sample of individuals (Joshi et al. 2015). The Likert scale questions measured participants’ perspectives of a variable from ‘strongly agree’ to ‘strongly disagree’ on a five-point scale. The students and staff had access to the online survey via a university intranet link for two weeks. The links were distributed via email or intranet messaging. There was no direct interaction between the principal researcher and the respondents regarding the content of the questionnaires. The responses were analysed and compared for similarities and differences via statistical analysis with the SPSS software tool. An exploratory data analysis method was utilised to construct a correlation matrix to explore relationships between the variables gathered in the dataset. Significant correlations were identified, and relationships were validated using t-tests. Significant relationships were revised into a 3D graph form to provide a representation (DuToit et al. 2012). The survey questions were:

The short and focused survey questions were piloted on three academics and three students to determine whether the questions were easily understood and could yield the data sought. No adjustments were required.
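The exploratory analysis described in the methods (a correlation matrix followed by t-tests on the correlations) can be illustrated with a short sketch. This is not the authors' SPSS procedure: the item names and the Likert responses below are hypothetical, Pearson's r is assumed as the correlation measure, and the t statistic uses the standard formula t = r·sqrt((n − 2)/(1 − r²)) with n − 2 degrees of freedom.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(ss_x * ss_y)

def correlation_matrix(data):
    """Nested dict holding r for every pair of survey items."""
    names = list(data)
    return {a: {b: pearson_r(data[a], data[b]) for b in names} for a in names}

def t_statistic(r, n):
    """t statistic for testing r against zero (n - 2 degrees of freedom)."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))

# Hypothetical five-point Likert responses; item names are illustrative only.
responses = {
    "osce_preference": [1, 2, 2, 3, 4, 5, 3, 2],
    "osce_accuracy":   [1, 2, 3, 3, 4, 4, 3, 1],
    "osce_anxiety":    [5, 4, 4, 2, 2, 1, 3, 5],
}

matrix = correlation_matrix(responses)
n = len(responses["osce_preference"])

# Report each distinct pair once, with its t statistic.
for a in matrix:
    for b in matrix[a]:
        if a < b:
            r = matrix[a][b]
            print(f"{a} vs {b}: r = {r:.3f}, t({n - 2}) = {t_statistic(r, n):.2f}")
```

Converting the t statistic to a p-value requires the t distribution, which a statistics package such as SPSS supplies; the sketch stops at the statistic itself.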

The ethics approval for this research was obtained from the Human Research Ethics Committee of the University of Southern Queensland. The ethics approval number is H16REA020. Further permission was granted to access students from the Head of School, Nursing, Midwifery and Paramedicine, Australian Catholic University.

The total student population response was n = 108 from a total population of n = 270 (40%). The academic population response was 37 from a total population of 110 (33.6%). Of the student population, 69 (65.7%) were female. Thirteen (35.1%) academic staff members were also female. Two subgroups of the student population were identified based on whether they were studying a single degree in paramedicine or a double degree in paramedicine and nursing. From this group, 47 (44.8%) were studying a single paramedicine degree. The majority of academic staff had been teaching for more than one year, with only three (8.1%) having started in the academic field in the past year. Students were asked to state their current grade point average (GPA), with 96 (92.3%) of the students having GPAs between 4.1 and 6.0. Of this group, the largest subset, 38 (36.5%), belonged to the GPA grouping of 5.1 to 5.5.

Responses ranked OSCEs as an assessment item compared to six other assessment forms commonly used in tertiary education (Table 1). The most preferred assessment task is ranked one.

How do students’ preferences of the OSCE format compare to other assessment modes?

Table 1. Student preferred assessment formats

| Preference | N | Mean | Std. Deviation | Skewness (Statistic) | Skewness (Std. Error) | Rank |
|---|---|---|---|---|---|---|
| MCQ | 105 | 1.61 | .766 | 1.849 | .236 | 1 |
| Written | 109 | 2.31 | .950 | .722 | .231 | 2 |
| Essay | 107 | 2.54 | 1.066 | .530 | .234 | 3 |
| Portfolio | 107 | 2.76 | 1.123 | .129 | .234 | 4 |
| OSCE | 109 | 2.77 | 1.296 | .308 | .231 | 5 |
| Oral | 108 | 3.22 | 1.210 | -.213 | .233 | 6 |
| Group | 108 | 3.70 | 1.079 | -.338 | .233 | 7 |
| Valid N (listwise) | 101 | | | | | |

Do student paramedics consider anxiety as a barrier to their performance on the OSCE?

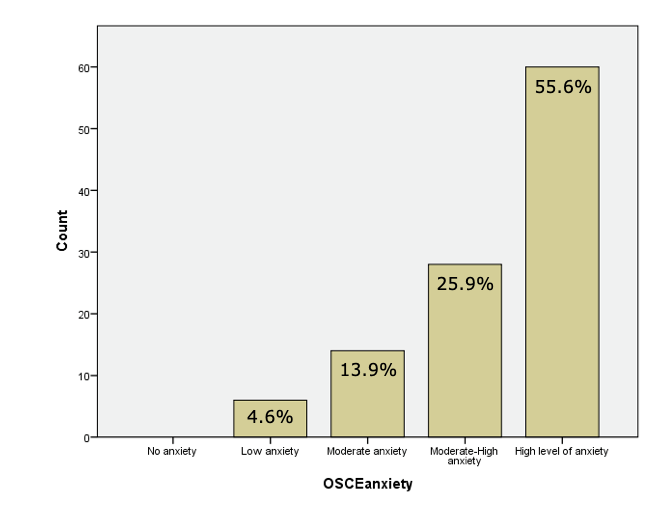

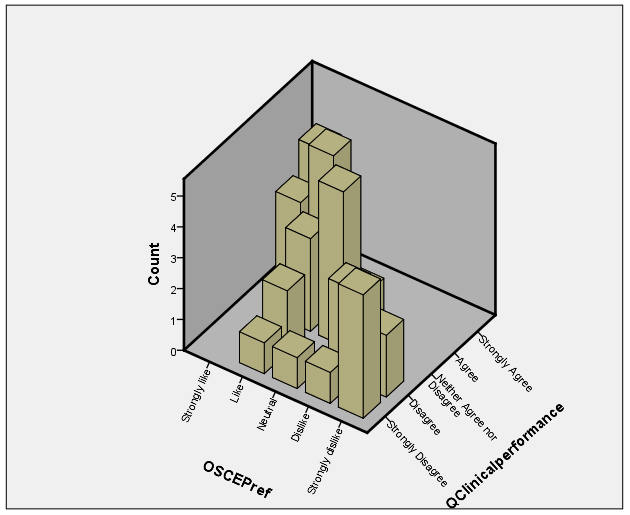

The following data show the students’ perceived level of anxiety when undertaking an OSCE (Figure 1) and how that anxiety relates to their preference for OSCEs as an assessment task.

Figure 1. Level of anxiety associated with the OSCE

Findings on whether the student participants perceived anxiety as a barrier to their performance indicated a strong, positive correlation between the two variables (r = .596, n = 108, p < 0.001, coefficient of determination [CD] = 35.5%). The result was statistically significant, supporting the hypothesis that students consider anxiety a barrier to their performance in OSCE assessments. Therefore, regardless of the students’ preference for OSCEs, participants were most likely to associate a high level of anxiety with participating in the assessment. For example, 50% of the population either ‘liked’ or ‘strongly liked’ OSCEs as an assessment item. Additionally, 13% of participants preferred the OSCE format for assessments, even though they had a very high level of anxiety associated with undertaking the assessment. Notably, 31% preferred the OSCE as an assessment, even though they had a moderate to high level of anxiety associated with the assessment. An equal number (31%) disliked or strongly disliked the OSCE as an assessment preference.

Some students perceived the OSCE as providing an accurate measure of their level of clinical performance despite having high levels of anxiety. Students who reported a lower level of anxiety towards OSCEs were more likely to believe OSCEs are an accurate measure of their clinical performance. Meanwhile, students with a higher level of anxiety were less likely to believe it is an accurate measure. This finding is particularly relevant when compared to the perceptions of staff revealed in the following question.

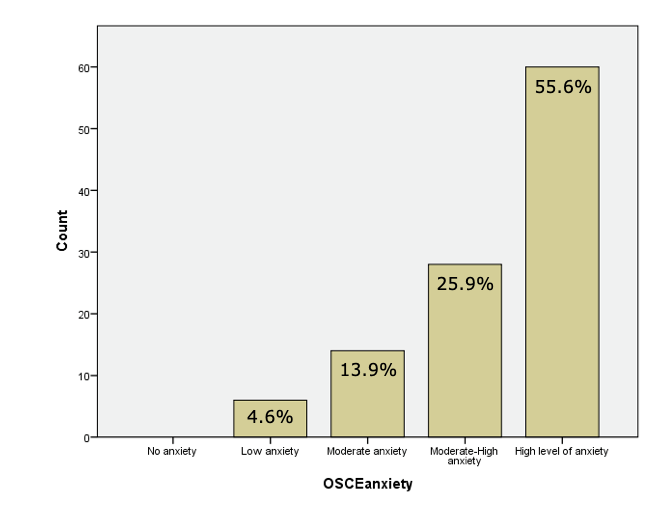

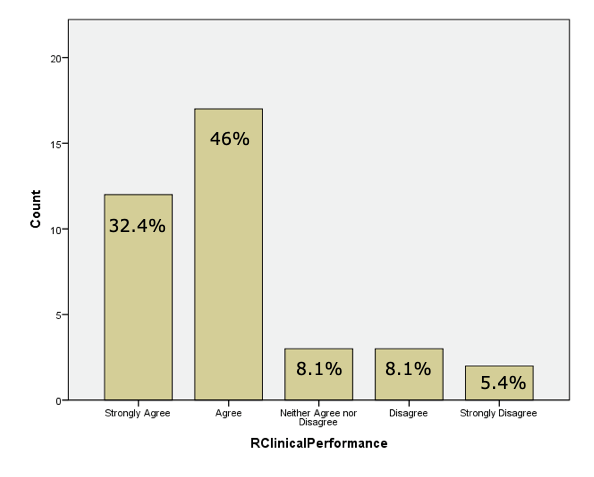

Are the academic staff perceptions of the intended outcomes of OSCE assessments consistent with those of the students?

The results varied concerning whether academic staff perceptions of the intended outcomes of OSCE assessments are consistent with those of the student paramedic participants (see Figures 2 and 3).

Figure 2. Academic perception of OSCE format

Figure 3. Student perception of OSCE format

Figure 2 and Figure 3 show that academics appeared to believe that the outcome of an OSCE best reflects the students’ clinical performance compared to other assessment strategies. However, the students’ perception of whether an OSCE reflects their clinical performance is more widely distributed.

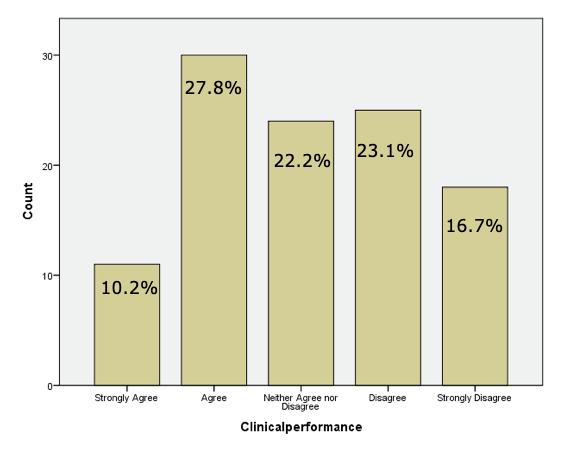

Do students perceive the OSCE format as an accurate indicator of their level of clinical performance?

There is a strong, positive correlation between the two variables (r = .686, n = 109, p < 0.001, CD = 47.05%), indicating a significant relationship between them. This result is statistically significant and supports the hypothesis that students perceive the OSCE format as an accurate indicator of their level of clinical performance. As observed in Figure 4, there appears to be a clear relationship between the students’ preference to undertake an OSCE assessment and their belief that the OSCE is an accurate measure of their clinical performance. Students who ‘agree’ or ‘strongly agree’ that the OSCE provides an accurate measure of their clinical performance are most likely to ‘agree’ or ‘strongly agree’ with a preference for an OSCE assessment.

Figure 4. Preference for the OSCE vs. Measure of Clinical Performance

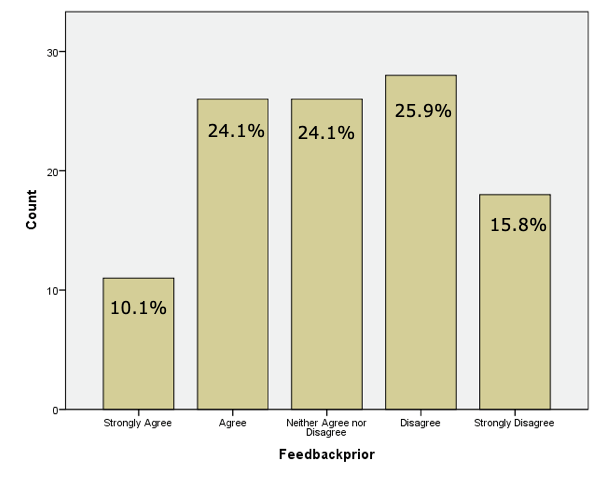

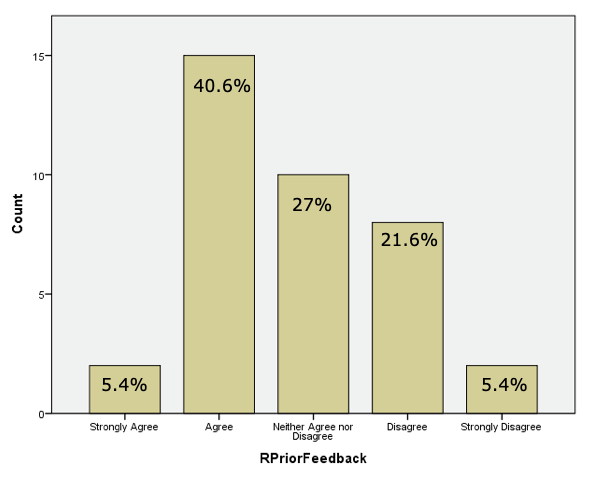

Do students and academic staff perceive the feedback provided before OSCE assessments as adequate?

The following figures reflect whether students perceive they received sufficient instructions and information before an OSCE. Most students are either ‘neutral’ or ‘disagree’ that the level of feedback provided before an OSCE is adequate (Figure 5). However, Figure 6 indicates that educators facilitating OSCE assessments are more likely than students to believe that the level of feedback provided before OSCEs is adequate. The differences between students’ perceptions and those of educators are reflected in several variables considered by the study. This is perhaps one of the most significant barriers to improving student perception of the reliability and validity of the OSCE format.

Figure 5. Student perception of feedback before an OSCE

Figure 6. Academic perception of feedback before OSCE

Overall, a correlation exists between the students’ perception of whether they received adequate feedback to prepare for OSCE, compared to perceptions of whether the OSCE is an accurate measure of their clinical performance. Results indicate there is a moderate, positive correlation between the two variables (r = 0.450, n = 108, p < 0.001, CD = 20.25%). This result is statistically significant and provides evidence that the more positively the student perceives the level of feedback provided on performance before the OSCE, the more strongly they agree that the OSCE is an accurate measure of their clinical performance.
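The CD values reported alongside each correlation can be checked with a short calculation, assuming CD denotes the coefficient of determination, that is, the square of Pearson's r expressed as a percentage. The sketch below uses the three r values reported in the results; the dictionary labels are shorthand introduced here for illustration.

```python
# Reported Pearson correlations; labels are illustrative shorthand.
reported_r = {
    "anxiety as a barrier to performance": 0.596,
    "OSCE preference vs. accuracy as a measure": 0.686,
    "pre-OSCE feedback vs. accuracy as a measure": 0.450,
}

def coefficient_of_determination(r):
    """Percentage of shared variance implied by a Pearson r (assumed CD = r^2)."""
    return 100 * r ** 2

for label, r in reported_r.items():
    cd = coefficient_of_determination(r)
    print(f"{label}: r = {r:.3f}, CD = {cd:.2f}%")
```

Under this assumption, r = .596 and r = .450 reproduce the reported 35.5% and 20.25%. For r = .686 the calculation gives roughly 47.06%, and the small difference from the reported 47.05% is presumably due to rounding of r.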

Findings from this study revealed that, although academic staff consider OSCEs an assessment methodology that best reflects a student’s clinical performance, the students’ perception of the OSCE is more widely distributed. Finally, there is a strong correlation between the student’s perception of the reliability and validity of the OSCE format as a measure of clinical performance and the two variables (anxiety associated with the assessment and adequacy of feedback before the assessment). However, there exist different perceptions regarding preparation information before an OSCE.

The movement of paramedicine education into the tertiary sector necessitates evidence-based practice content to effectively contribute to learning and teaching processes and outcomes (Donaghy 2018). The need for an increased level of evidence to support learning and teaching is most significant concerning assessment strategies and practices (Harden 2015).

Most participants rated their anxiety levels associated with the OSCE as either high or moderately high. This finding is consistent with the levels of anxiety indicated by physical therapy doctoral students when participating in OSCE assessments (Swift et al. 2013). Thirty-one per cent of the participants correlated this high level of anxiety to a ‘dislike’ for the OSCE’s format. Another 31% of the cohort stated that, while the OSCE elicited a high level of anxiety, they preferred the OSCE as an assessment format. Dahwood et al.’s research (2016) reported that academic staff could help reduce exam anxiety through techniques, such as group work, before exams and by ensuring that students had access to student advisors and support systems. However, the key recommendation by Dahwood et al. (2016) is that there was a need for students to maintain a high level of physical and mental health before and during exam periods to promote academic performance. Notably, the reason for exam anxiety was not this study’s focus. However, this could form the basis of future research into the factors contributing to the perceptions of students undertaking OSCEs.

Findings also indicated that 50% of the student cohort preferred OSCEs as a method of assessment, even though it produced high levels of anxiety. As such, it could be argued that, instead of anxiety being a barrier to a student’s performance in an OSCE, anxiety could be a variable that creates a positive perception of the OSCE as an effective assessment methodology. This phenomenon has been observed in Midwifery research of a similar nature (Jay 2007). However, anxiety levels as a barrier to success must be considered to improve students’ perception of the reliability and validity of the OSCE as an assessment. By reducing students’ levels of anxiety while maintaining their perception of the format as a positive assessment method, it might be possible to increase student confidence in its effectiveness.

Comparative studies in other health-related professions also found heightened levels of anxiety in students when performing an OSCE assessment task, although no significant difference in performance outcome was indicated (Brand & Schoonheim‐Klein 2009). In this study, however, findings indicate that the students’ high levels of anxiety influence their perception of the format as an accurate measure. Moreover, the lower the student’s level of anxiety, the more likely they are to agree that an OSCE is an accurate measure of their clinical performance. Thus, if it were possible to reduce the high levels of anxiety students associate with the OSCE, the perceptions of the students could be more positive and align more closely with academics’ almost universal perception of the OSCE as an effective assessment method.

Student participants who were more positive about the OSCE and its ability to measure clinical ability were more likely to agree that it is an effective measure of their clinical performance. This strong, positive relationship was also evident in the students’ preference for an OSCE as an assessment item and whether they believe the format provides a better illustration of their clinical competence than other assessment types. The stronger the participants’ preference for the OSCE as an assessment item, the more strongly they agreed that the OSCE was a better measure of their clinical competence compared to other assessment formats. This series of strong positive relationships implies that the paramedic students involved in this study perceived the OSCE as an accurate indicator of their clinical performance. Furthermore, this finding is consistent with previous studies in other health disciplines regarding the effectiveness of an OSCE as a measure of clinical performance (Graham et al. 2013).

Another area of consideration was whether the academic staff perceptions of the OSCE’s intended outcomes were consistent with those of the student cohort. Consistency, in relation to perception, is important. Tavares et al. (2013) concluded that student acceptance of the OSCE format as being reliable needed to include factors, such as standardisation and assessor objectivity. The study’s results indicated that student perceptions do not always align closely with those of the academics facilitating the assessment. The academic staff involved in this study considered the OSCE as the best reflection of a student’s clinical performance. However, the students’ perceptions of the OSCE as an effective measure were more widely distributed, although the reason for these perceptions was not a component of this study.

Results indicated that students had a difference of opinion on the adequacy of feedback when compared to the perception of the academics involved with the assessment. However, the more strongly students believed that they received adequate feedback during their preparation before the OSCE, the more inclined students were to agree that an OSCE is an accurate measure of their clinical performance. As such, the importance of effective feedback should be considered vital for the development of clinical assessments in health-specific professions, such as paramedicine (Lefroy et al. 2015). Students rated multiple-choice questions (MCQ) as the most preferred assessment strategy and group activities as the least preferred assessment item. The reason for this preference for MCQs was not investigated in this study. Further, students that participated in this study only ranked the OSCE fifth in preference out of seven assessment methodologies surveyed.

Notably, Van De Ridder et al. (2008) indicated that the quantity and quality of feedback provided before the assessment was a key factor in the students’ perception of success. In this study, students were less likely than academics to agree that the level of feedback provided before assessment situations is adequate. This indicates that the academics have a potentially different perception of what equates to the provision of an adequate level of feedback. This requires further investigation since the students’ perception of preparation for the assessment could be considered as the primary indicator of success.

The principal limitation was that the researcher was a staff member of one of the universities and potentially had direct or indirect contact with the student and academic cohort during the survey period. Any contact was a part of the course’s formal curriculum, and there was no discourse regarding the survey data between the academic and students at any time (surveys were submitted anonymously). No data was collected on entry-level scores for the degrees at the two universities, and no data was collected about whether students’ GPAs influenced assessment preferences.

The increase in discipline-specific investigations of educational methodologies is an important development in the ongoing improvement in delivering effective learning and teaching in the discipline of paramedicine. Of key significance is that students and academics perceived OSCEs to be a valid and reliable measure of students’ clinical performance. There was a difference in perception of the effect of variables, such as adequate levels of feedback before assessment. Still, the comparison between the academics’ and students’ perceptions in this study warrants further investigation. The students indicated heightened levels of anxiety when undertaking an OSCE-style assessment. However, students also indicated that this increased level of anxiety gave them a more accurate measure of the perception of their clinical performance when compared to alternative assessment methods. When considering the importance of practical clinical assessment in the students’ ability to demonstrate their clinical competence, the OSCE format appears to enable students to most effectively ‘show how’ compared to alternate assessments. The ability of a well-designed OSCE to facilitate students to apply clinical knowledge in a standardised setting could improve its value as an assessment tool. Finally, although an OSCE is considered an accurate measure of students’ clinical performance, improving the perception of the assessment format’s reliability and validity could result in improved student confidence in the format and its efficiency as a measure of clinical performance.

The authors acknowledge the assistance of the paramedicine departments from the Australian Catholic University (ACU) and the Queensland University of Technology (QUT). We particularly mention Mr Peter Horrocks (QUT) for his assistance in disseminating the surveys and Mr Brendan Hall (ACU) for his invaluable assistance with the statistical analysis.

Adamson, KA Kardong-Edgren, S & Willhaus, J 2013, ‘An updated review of published simulation evaluation instruments’, Clinical Simulation in Nursing, vol. 9, no. 9, pp. e393–e400.

Bartfay, WJ Rombough, R Howse, E & LeBlanc, R 2004, ‘The OSCE approach in nursing education: Objective structured clinical examinations can be effective vehicles for nursing education and practice by promoting the mastery of clinical skills and decision-making in controlled and safe learning environments’, The Canadian Nurse, vol. 100, no. 3, p. 18.

Brand, HS & Schoonheim‐Klein, M 2009, ‘Is the OSCE more stressful? Examination anxiety and its consequences in different assessment methods in dental education’, European Journal of Dental Education, vol. 13, no. 3, pp. 147–153.

Carraccio, C & Englander, R 2000, ‘The objective structured clinical examination: A step in the direction of competency-based evaluation’, JAMA Pediatrics, vol. 154, no. 7, pp. 736–41.

Dahwood, E Ghadeer, HA Mitsu, R Almutary, N & Alenez, B 2016, ‘Relationship between test anxiety and academic achievement among undergraduate nursing students’, Journal of Education and Practice, vol. 7, no. 2, pp. 57–65.

Donaghy, J 2008, ‘Higher education for paramedics—Why?’, Journal of Paramedic Practice, vol. 1, no. 1, pp. 31–5.

Donaghy, J 2018, ‘Continuing professional development: Reflecting on our own professional values and behaviours as paramedics’, Journal of Paramedic Practice, vol. 10, no. 4, pp. 1–4.

DuToit, SH Steyn, AGW & Stumpf, RH 2012, Graphical exploratory data analysis. Springer Science & Business Media.

Farmer, R Oakman, P & Rice, P 2016, ‘A review of free online survey tools for undergraduate students’, MSOR Connections, vol. 15, no. 1, pp. 71–78.

Graham, R Zubiaurre Bitzer, LA & Anderson, OR 2013, ‘Reliability and predictive validity of a comprehensive preclinical OSCE in dental education’, Journal of Dental Education, vol. 77, no. 2, pp. 161–167.

Grebennikov, L & Shah, M 2013, ‘Monitoring trends in student satisfaction’, Tertiary Education and Management, vol. 19, no. 4, pp. 301–322.

Harden, RM 2015, ‘Misconceptions and the OSCE’, Medical Teacher, vol. 37, no. 7, pp. 608–610.

Harden, RM 1988, ‘What is an OSCE?’, Medical Teacher, vol. 10, no. 1, pp. 19–22.

Hastie, MJ Spellman, JL Pagano, PP Hastie, J & Egan, BJ 2014, ‘Designing and implementing the objective structured clinical examination in anesthesiology’, Anesthesiology, vol. 120, no. 1, pp. 196–203.

Jansson, M Kääriäinen, M & Kyngäs, H 2013, ‘Effectiveness of simulation-based education in critical care nurses’ continuing education: A systematic review’, Clinical Simulation in Nursing, vol. 9, no. 9, pp. e355–e60.

Jay, A 2007, ‘Students’ perceptions of the OSCE: A valid assessment tool?’, British Journal of Midwifery, vol. 15, no. 1, pp. 32–37.

Joshi, A Kale, S Chandel, S & Pal, DK 2015, ‘Likert scale: Explored and explained’, Current Journal of Applied Science and Technology, pp. 396–403.

Kelley, K Clark, B Brown, V & Sitzia, J 2003, ‘Good practice in the conduct and reporting of survey research’, International Journal for Quality in Health Care, vol. 15, no. 3, pp. 261–266.

Krishnan, A 2009, ‘What are academic disciplines? Some observations on the disciplinarity vs. interdisciplinarity debate’, in ESRC National Centre for Research Methods (ed.), National Centre for Research Methods, pp. 1–58.

Lefroy, J Watling, C Teunissen, PW & Brand, P 2015, ‘Guidelines: The do’s, don’ts and don’t knows of feedback for clinical education’, Perspectives on Medical Education, vol. 4, no. 6, pp. 284–299.

McKinley, RK Strand, J Gray, T Schuwirth, L Alun-Jones, T & Miller, H 2008, ‘Development of a tool to support holistic generic assessment of clinical procedure skills’, Medical Education, vol. 42, no. 6, pp. 619–627.

Mitchell, ML Henderson, A Groves, M Dalton, M & Nulty, D 2009, ‘The objective structured clinical examination (OSCE): Optimising its value in the undergraduate nursing curriculum’, Nurse Education Today, vol. 29, no. 4, pp. 398–404.

Nasir, AA Yusuf, AS Abdur-Rahman, LO Babalola, OM Adeyeye, AA & Popoola, AA 2014, ‘Medical students’ perception of objective structured clinical examination: A feedback for process improvement’, Journal of Surgical Education, vol. 71, no. 5, pp. 701–6.

O’Brien, K Moore, A Hartley, P & Dawson, D 2013, ‘Lessons about work readiness from final year paramedic students in an Australian university’, Australasian Journal of Paramedicine, vol. 10, no. 4.

O’Brien, K Moore, A Dawson, D & Hartley, P 2014, ‘An Australian story: Paramedic education and practice in transition’, Australasian Journal of Paramedicine, vol. 11, no. 3, p. 77.

O’Meara, PF Furness, S & Gleeson, R 2017, ‘Educating paramedics for the future: A holistic approach’, Journal of Health & Human Services Administration, vol. 40, no. 2, pp. 219–51.

Pell, G Fuller, R Homer, M & Roberts, T 2012, ‘Is short-term remediation after OSCE failure sustained? A retrospective analysis of the longitudinal attainment of underperforming students in OSCE assessments’, Medical Teacher, vol. 34, no. 2, pp. 146–50.

Rushforth, HE 2007, ‘Objective structured clinical examination (OSCE): Review of literature and implications for nursing education’, Nurse Education Today, vol. 27, no. 5, pp. 481–90.

Sheen, L 2003, ‘Developing an objective structured clinical examination for ambulance paramedic education - Introducing an objective structured clinical examination (OSCE) into ambulance paramedic education’, 12th annual Teaching and Learning Forum, Edith Cowan University, Perth.

Swift, M Spake, E & Gajewski, BJ 2013, ‘Student performance and satisfaction for a musculoskeletal objective structured clinical examination’, Journal of Allied Health, vol. 42, no. 4, pp. 214–22.

Tavares, W Boet, S Theriault, R Mallette, T & Eva, KW 2013, ‘Global rating scale for the assessment of paramedic clinical competence’, Prehospital Emergency Care, vol. 17, no. 1, pp. 57–67.

Van De Ridder, JM Stokking, KM McGaghie, WC & Ten Cate, OTJ 2008, ‘What is feedback in clinical education?’, Medical Education, vol. 42, no. 2, pp. 189–197.

Von Wyl, T Zuercher, M Amsler, F Walter, B & Ummenhofer, W 2009, ‘Technical and non‐technical skills can be reliably assessed during paramedic simulation training’, Acta Anaesthesiologica Scandinavica, vol. 53, no. 1, pp. 121–127.

Williams, B Onsman, A & Brown, T 2009, ‘From stretcher-bearer to paramedic: The Australian paramedics’ move towards professionalisation’, Journal of Emergency Primary Health Care, vol. 7, no. 4.